TL;DR: As AI coding tools turn software development from a deterministic activity into a stochastic one, developers are bearing the real costs of AI failures (especially data loss), even though these tools are marketed as productivity boosters. Ad-hoc defenses like containers, denylists, and human-in-the-loop controls help, but they don’t scale and are routinely bypassed. The core issue is cost placement: safety shouldn’t live in developer attention. Instead, failures should be handled by managed, asynchronous detection and response services. Krnel’s answer is “PagerDuty for Claude”, a lightweight, non-blocking EDR (endpoint detection and response) system for AI agents that continuously detects risky behavior and enables remediation without slowing developers down.

Who Pays for AI Failures?

Organizations are moving from “building with brick” to “building with water.” Deterministic software development is giving way to a more stochastic style where agents are doing the coding. In this more fluid model where “the river makes its own course,” who bears the security and reliability costs?

This is still being fiercely debated. Right now, developers are at the forefront of adoption, and they are absorbing both the benefits and the consequences firsthand. The most visible and painful example is data loss: private files deleted, repositories corrupted, environments wiped, all while using AI tools marketed as productivity multipliers.

Claude, and increasingly Codex and Gemini, have become tools of choice. Alongside their adoption, a familiar genre of story has emerged:

"Claude Code today decided to wipe my homedir while making a simple git commit. Instead of unstaging a directory it mistakenly added, it decided—without prompting for permission (not in auto-approve or dangerous mode)—to wipe my homedir off the face of the planet.

What really amazes me is that there are zero guardrails, despite the ever-increasing stories of similar data loss, to prevent dangerous actions like this from occurring. The apologies are salt in the wound"

These aren’t edge cases anymore. They’re a pattern.

The Developer Workarounds

In response, developers are sharing defensive strategies with one another on Reddit, Discord, and small Signal groups:

- Running Claude in a container

- Keeping a human in the loop

- Creating denylists for specific commands

- Adding hooks and regex-based rules to intercept actions

- Careful prompt engineering in AGENTS.md

These mitigations help, but they don’t solve the underlying problem.

Containers work best when the task is fully specified and the environment is static, which is rarely true for exploratory agent workflows. Human-in-the-loop controls introduce friction that developers routinely bypass (“I run YOLO mode often; no one has time to click accept,” as one developer told us). And we’ve spent decades enumerating rules and regexes, only to learn the same lesson repeatedly: whatever is being constrained routes around them, and models are no exception. As one developer put it: “restrict writes to foo.py and the model will happily comply, by piping content through tee -a foo.py instead.”
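To make the regex point concrete, here is a minimal sketch, assuming a hypothetical pre-execution hook that checks each shell command against a denylist before it runs. The patterns and the is_blocked helper are illustrative inventions, not taken from any real tool. The rules catch writes to foo.py through shell redirection and a literal rm -rf ~, but the tee -a foo.py variant, split flags, and an entirely different delete command all slip through.

```python
import re

# Hypothetical denylist a developer might wire into a pre-execution hook.
DENYLIST = [
    re.compile(r">>?\s*foo\.py\b"),   # writes to foo.py via > or >> redirection
    re.compile(r"\brm\s+-rf\s+~"),    # recursive force delete of the home directory
]


def is_blocked(command: str) -> bool:
    """Return True if any denylist pattern matches the shell command."""
    return any(pattern.search(command) for pattern in DENYLIST)


if __name__ == "__main__":
    attempts = [
        "echo 'x = 1' > foo.py",         # caught: plain redirection
        "echo 'x = 1' >> foo.py",        # caught: append redirection
        "echo 'x = 1' | tee -a foo.py",  # missed: same effect via tee
        "rm -rf ~",                      # caught
        "rm -r -f ~",                    # missed: flags written separately
        "find ~ -delete",                # missed: a different command entirely
    ]
    for cmd in attempts:
        print(("BLOCKED" if is_blocked(cmd) else "allowed") + ": " + cmd)
```

Each miss could be patched with another pattern, which is exactly the treadmill described above: every new rule adds friction and maintenance without closing the next bypass.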

The Real Problem: Cost Placement

Developers should not be burdened with babysitting agents or jumping through containerized hoops just to stay safe. That cost should not live in the developer’s attention; it should be borne by a centralized, managed service instead.

In his 2025 year-end review, AI security researcher Joshua Sax describes this dynamic as the “friction paradox”: security controls become less effective as they grow more complex, more academic, and more interruptive, until they’re ignored entirely. The security community has learned this lesson repeatedly over the past decades. For instance, Windows Vista’s User Account Control (UAC) prompts, introduced in 2007, were designed to bring every privilege elevation to the user’s attention, but users quickly learned to click through them reflexively.

He argues instead for:

“Asynchronous detection and response as the lowest-friction security control—people and automation reviewing agent transcripts in the background to detect and remediate issues. This is the least mature area of AI agent security, and where the industry should double down. What we likely need are managed detection and response services that let AI application developers outsource this problem.”

This reframes the question entirely. The cost of failures is no longer pushed onto individual developers; it’s absorbed by infrastructure designed to handle it.
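The asynchronous alternative can be sketched as follows, under loudly stated assumptions: the agent’s actions are modeled as a stream of shell commands, and the two detectors are trivial string checks standing in for real ones (they are hypothetical and do not represent Krnel’s detectors). What the sketch does show is the architecture: the agent hands each action to a background reviewer through a queue and keeps going, while the reviewer records alerts for later remediation instead of gating execution. The names review_worker, run_agent, and review_session are illustrative only.

```python
import queue
import threading
from dataclasses import dataclass

# Hypothetical detectors: name -> predicate over a shell command string.
# These are deliberately trivial stand-ins; the point is where they run.
DETECTORS = {
    "home_dir_delete": lambda cmd: "rm " in cmd and "~" in cmd,
    "force_push": lambda cmd: "git push" in cmd and "--force" in cmd,
}


@dataclass
class Alert:
    step: int       # position of the action in the agent transcript
    detector: str   # which detector fired
    command: str    # the action that triggered it


def review_worker(events: queue.Queue, alerts: list) -> None:
    """Background reviewer: consume transcript events and record alerts."""
    while True:
        event = events.get()
        if event is None:  # sentinel: the agent session has ended
            break
        step, command = event
        for name, detect in DETECTORS.items():
            if detect(command):
                # A real service might page a human or trigger a rollback here.
                alerts.append(Alert(step, name, command))


def run_agent(commands: list, events: queue.Queue) -> None:
    """Stand-in for the agent: log each action and move on without waiting."""
    for step, command in enumerate(commands):
        events.put((step, command))  # unbounded queue, so this never blocks
        # ... the agent would execute `command` here; nothing gates it
    events.put(None)


def review_session(commands: list) -> list:
    """Run one hypothetical agent session with a concurrent reviewer."""
    events: queue.Queue = queue.Queue()
    alerts: list = []
    reviewer = threading.Thread(target=review_worker, args=(events, alerts))
    reviewer.start()
    run_agent(commands, events)
    reviewer.join()  # wait only so the demo can print every alert
    return alerts


if __name__ == "__main__":
    session = [
        "git add .",
        "git status",
        "rm -rf ~/project-old",
        "git push --force origin main",
    ]
    for alert in review_session(session):
        print(f"step {alert.step} [{alert.detector}]: {alert.command}")
```

The design choice is where the cost lands: the agent never waits on the reviewer, so developer flow is untouched, and the detection burden sits in a separate component that a managed service could own. The tradeoff is that a flagged action has already run, which is why remediation (restoring a snapshot, rolling back a commit, paging a human) is the other half of detection and response.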

PagerDuty for Claude

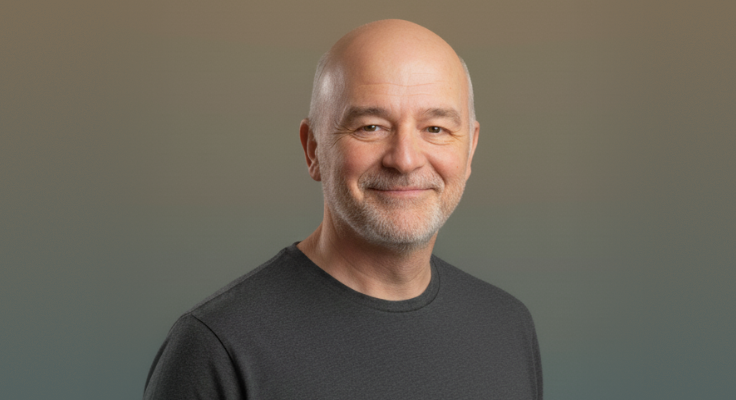

This is the view we’ve been building toward at Krnel. We’re excited to share what we think of as “PagerDuty for Claude” (better name TBD): a lightweight Endpoint Detection and Response system for AI agents and the developers who control them. It continuously analyzes Claude’s behavior, flags risky actions, and enables remediation, without blocking developers in the moment or forcing them into brittle rule systems.

You can see a short demo video of the system here, along with a concrete list of detectors we’ve built for Claude and other domains here. These Claude Code probes were developed using Krnel Graph, allowing us to build and deploy detectors at a speed and scale that far outpaced previous efforts.

PagerDuty for Claude Code is currently in closed alpha. If you’re interested, or have feedback or ideas, we’d love to hear from you.

Talk to us

If you’re deploying LLMs in sensitive workflows, building agentic systems, or struggling with false positives in safety tools, we’d love to talk.

We're actively working with partners to embed Krnel’s introspection tools in high-trust applications, and we’re always open to new use cases, integrations, and field testing collaborations.

Reach out at info@krnel.ai