Technology

Understand, control, and build reliable agents by reading and writing their representations, beyond inputs and outputs alone.

Trust Economy

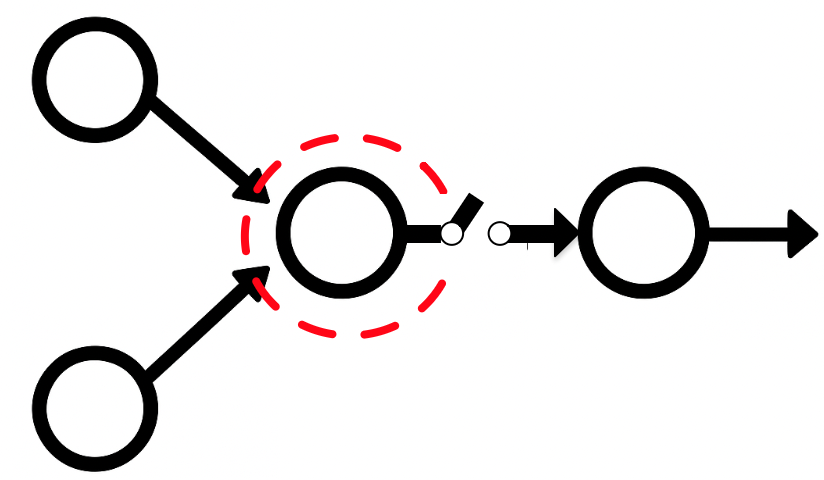

The tokens you get from an AI depend only partially on the tokens you feed it. Modern AI systems contain many other hidden ingredients that affect their output tokens in complex ways, which makes building secure and reliable AI applications challenging.

This makes using AI today a lot like eating from a can. It's convenient, and we put a lot of trust in the label and the brand. But does it really contain the ingredients it claims? What is inside that is not listed? How do your additions (prompts and context) interact with those ingredients, and what happens as a result?

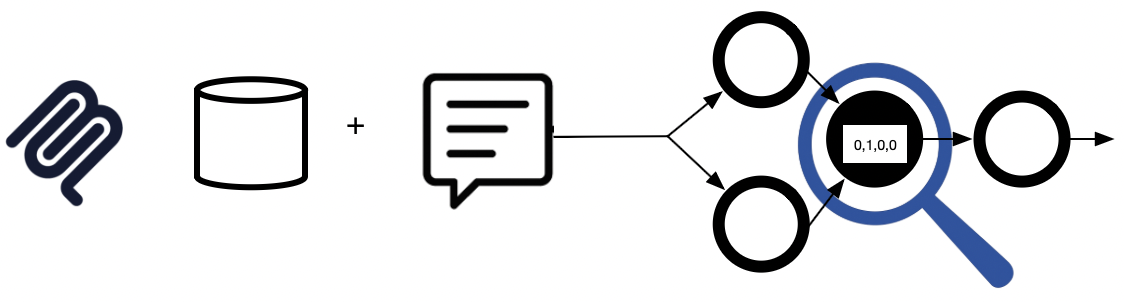

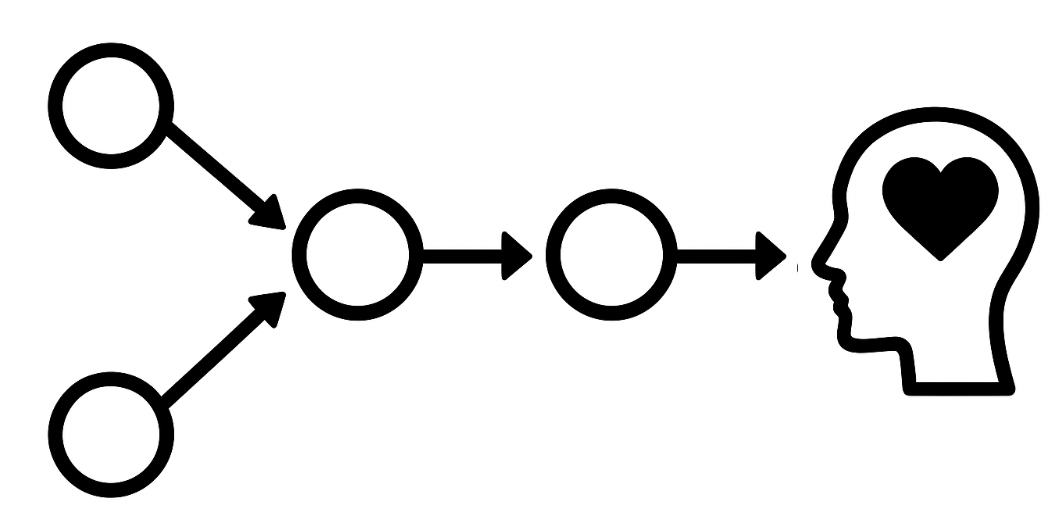

Krnel opens up agents and looks at their representations to reveal their internal beliefs, making it possible to explain, audit, and control how they think. This enables more secure and reliable agents.

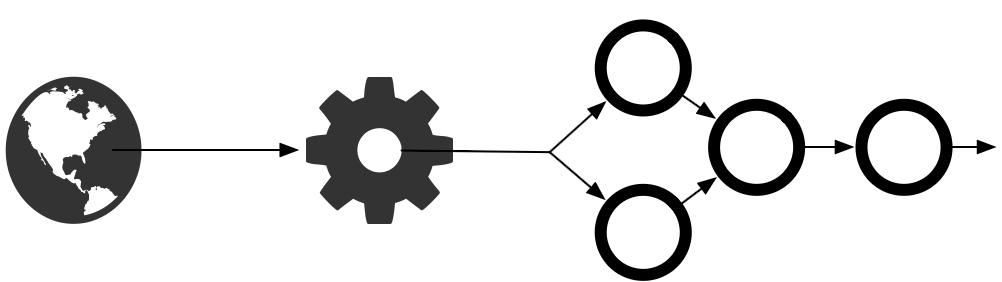

How we've controlled AI to date.

Model Layer

Control the AI by controlling its features. This was the art of the pre-deep-learning era.

Model Layer

Learn controls at post-training time (instruction fine-tuning and RLHF). This is mostly the work of frontier labs.

Application Layer

Control the AI by controlling its prompts.

Application Layer

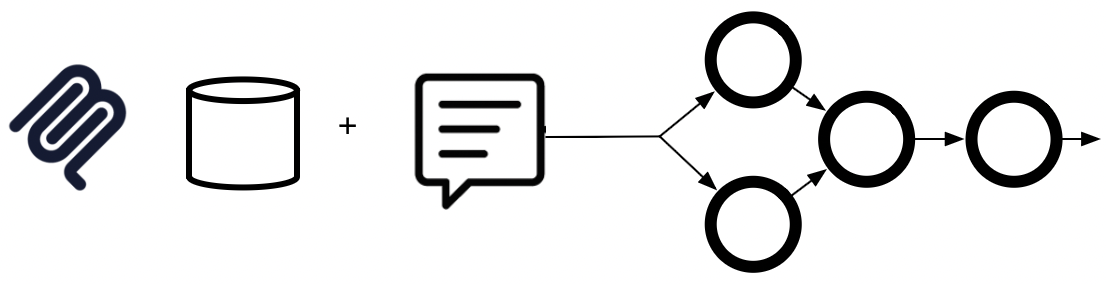

Control the AI by controlling its context (RAG/MCP) and prompts.

Application Layer

Control the AI through manipulating its internal representations.

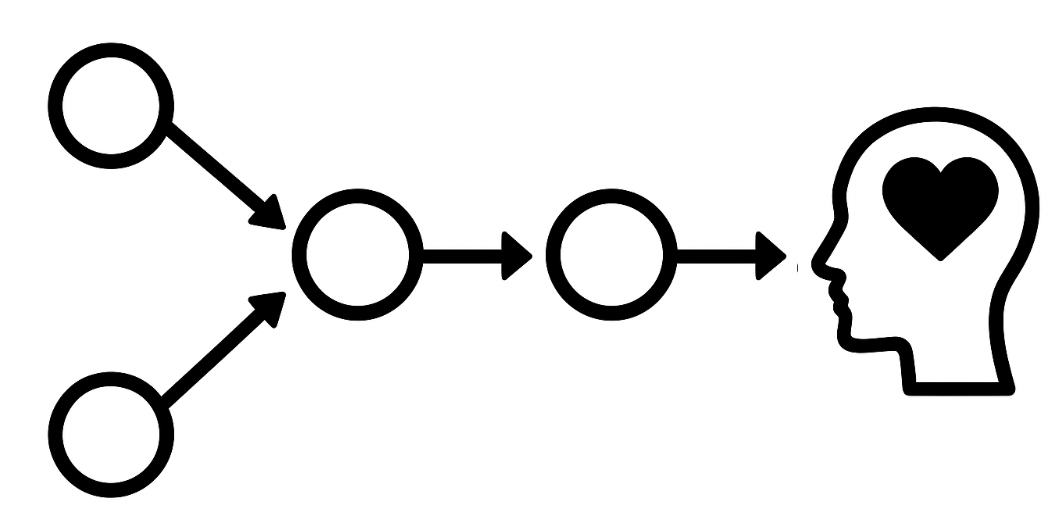

Model Development Life Cycle.

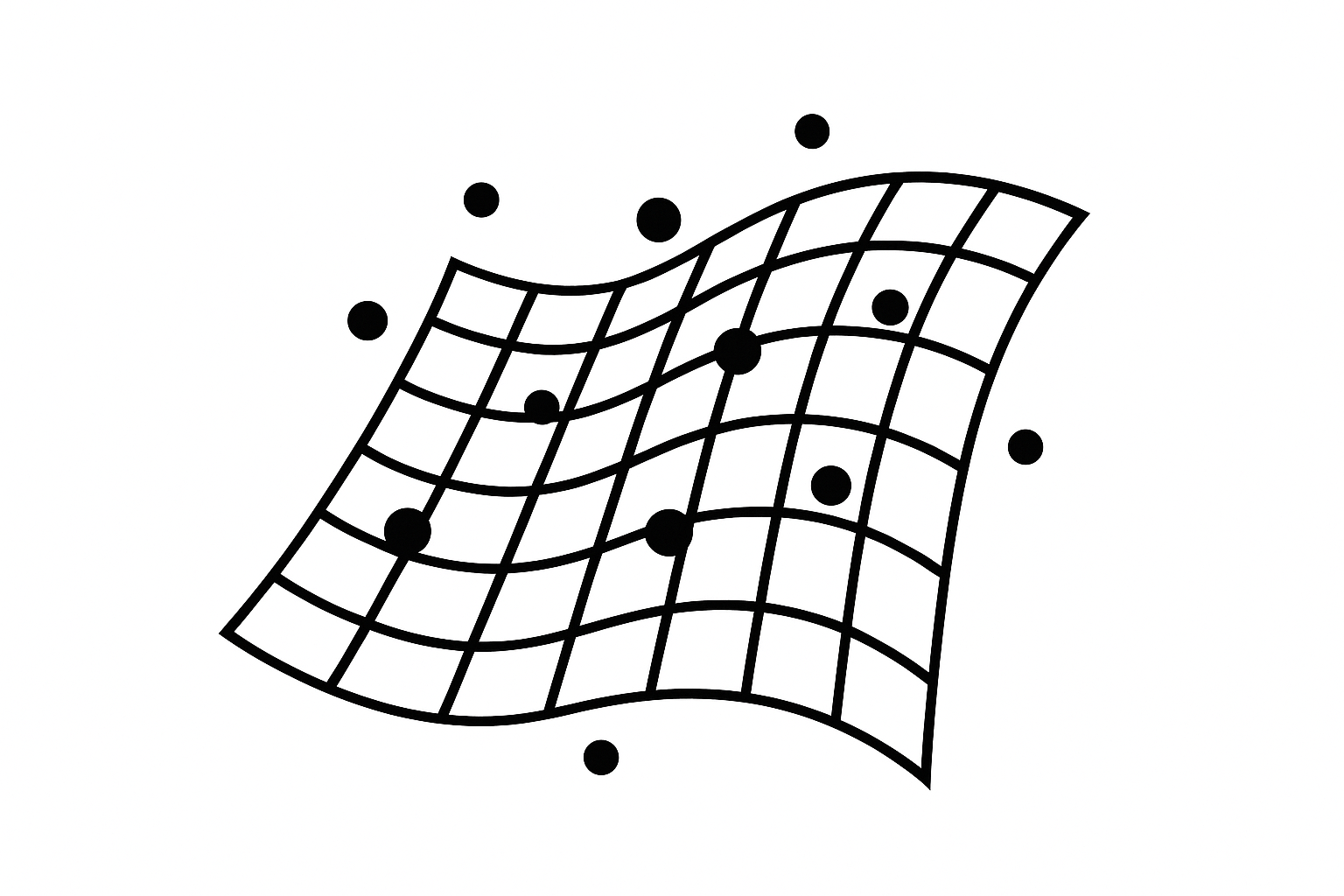

We can use representation engineering at the data layer. When you write and deploy an agent, representation engineering can be used to check properties such as data complexity, quality, drift, and provenance.
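As a rough illustration of one such data-layer check, the drift case might look like the sketch below. It assumes every input has already been mapped to a representation vector (for example, a hidden-state activation). The toy vectors, the function names, and the threshold are hypothetical and are not part of any Krnel API.

```python
import math

def mean_vector(vectors):
    """Average a list of equal-length representation vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

def cosine_distance(a, b):
    """Return 1 - cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def drift_score(reference, incoming):
    """Compare the mean representation of a reference batch with a new batch."""
    return cosine_distance(mean_vector(reference), mean_vector(incoming))

# Hypothetical toy representations standing in for real model activations.
reference = [[1.0, 0.0, 0.1], [0.9, 0.1, 0.0], [1.1, -0.1, 0.1]]
similar = [[1.0, 0.05, 0.1], [0.95, 0.0, 0.05]]
shifted = [[0.0, 1.0, 0.1], [0.1, 0.9, 0.0]]

DRIFT_THRESHOLD = 0.2  # hypothetical cutoff; tune on your own data

for name, batch in [("similar", similar), ("shifted", shifted)]:
    score = drift_score(reference, batch)
    status = "drift" if score > DRIFT_THRESHOLD else "ok"
    print(f"{name}: {status}")
```

Comparing batch means is the simplest possible choice here; a production check would likely use a richer distribution test.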

Representation engineering can also support how the agent represents the data it is learning.

Representation engineering can align models through unlearning and steering at alignment time.
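To make steering concrete, here is a minimal sketch of one common approach from the representation engineering literature: a difference-of-means steering vector added to a hidden state. This is an assumption chosen for illustration, not a description of Krnel's method, and the toy activations and function names are hypothetical.

```python
import math

def mean_vector(vectors):
    """Average a list of equal-length activation vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

def steering_vector(positive, negative):
    """Direction from the mean 'undesired' activation to the mean 'desired' one."""
    pos_mean = mean_vector(positive)
    neg_mean = mean_vector(negative)
    return [p - n for p, n in zip(pos_mean, neg_mean)]

def steer(hidden, direction, alpha):
    """Shift a hidden state along the steering direction by strength alpha."""
    return [h + alpha * d for h, d in zip(hidden, direction)]

def projection(hidden, direction):
    """Signed length of `hidden` along the unit-normalized direction."""
    dot = sum(h * d for h, d in zip(hidden, direction))
    return dot / math.sqrt(sum(d * d for d in direction))

# Hypothetical activations collected on desired vs. undesired behavior.
desired = [[1.0, 0.0], [0.8, 0.2]]
undesired = [[-1.0, 0.0], [-0.8, -0.2]]

direction = steering_vector(desired, undesired)
hidden = [-0.5, 0.3]
steered = steer(hidden, direction, alpha=1.0)

# The steered state should sit further along the desired direction.
print(projection(hidden, direction) < projection(steered, direction))
```

In a real model the shift would be applied to a chosen layer's activations during the forward pass; which layer and which strength to use are empirical choices.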

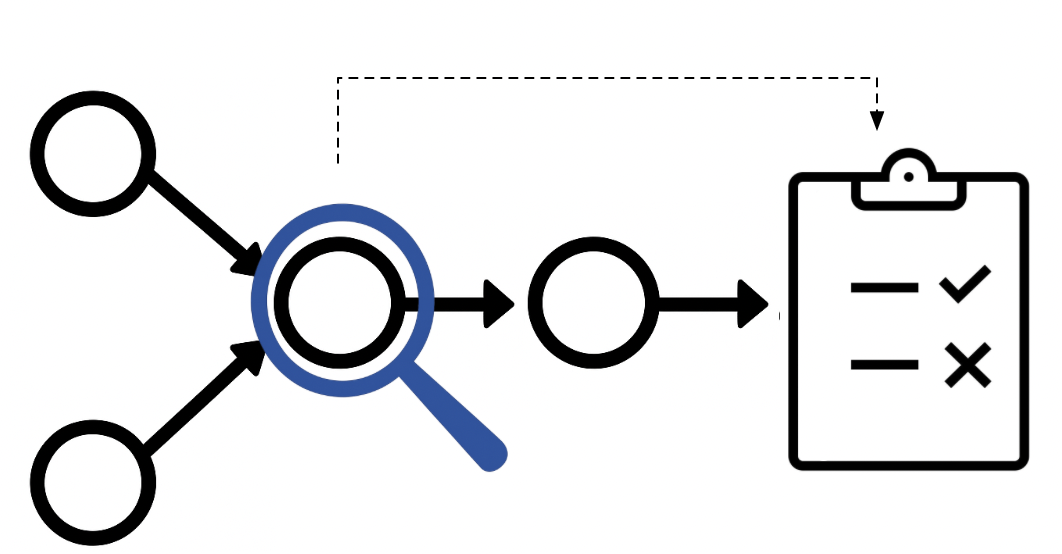

Representation engineering can be used to evaluate a model's latent knowledge and beliefs on a task, not just its outputs.

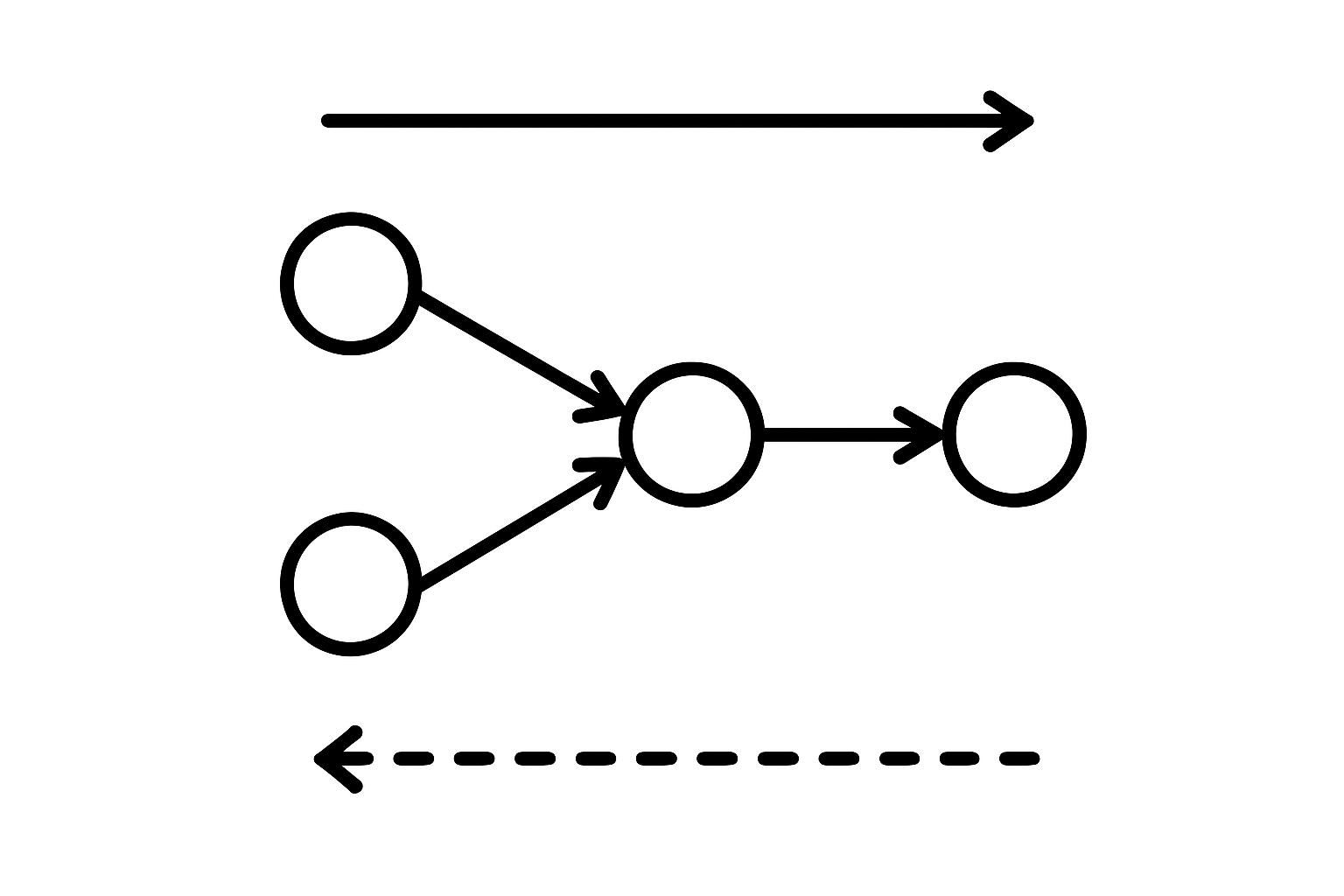

We can use representation engineering as part of test-driven development (TDD) in a CI/CD pipeline. Imagine you write an agent and deploy it. Representation engineering can be used to check for anomalies in the agent's representations caused by the changes made.
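A check like this could run as an ordinary test in the pipeline. The sketch below assumes you store a baseline representation for each prompt in a fixed test set and recompute representations after every change. The prompt ids, vectors, tolerance, and function names are all hypothetical.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two representation vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def find_anomalies(baseline, candidate, tolerance):
    """Return the prompt ids whose representation moved more than `tolerance`."""
    return sorted(
        prompt_id
        for prompt_id, vec in baseline.items()
        if euclidean(vec, candidate[prompt_id]) > tolerance
    )

def assert_no_regressions(baseline, candidate, tolerance):
    """Fail the build if any representation moved too far from its baseline."""
    anomalies = find_anomalies(baseline, candidate, tolerance)
    if anomalies:
        raise AssertionError(f"representation anomalies on: {anomalies}")

# Hypothetical representations before and after a change to the agent.
baseline = {"refund_policy": [0.2, 0.9], "greeting": [0.8, 0.1]}
candidate = {"refund_policy": [0.25, 0.85], "greeting": [0.1, 0.9]}

print(find_anomalies(baseline, candidate, tolerance=0.3))
```

Here only the `greeting` prompt moves beyond the tolerance, so a CI job calling `assert_no_regressions` on these values would fail and point at that prompt.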

Representation engineering can be used to detect, control, and steer models at runtime. See our guardrails solution.

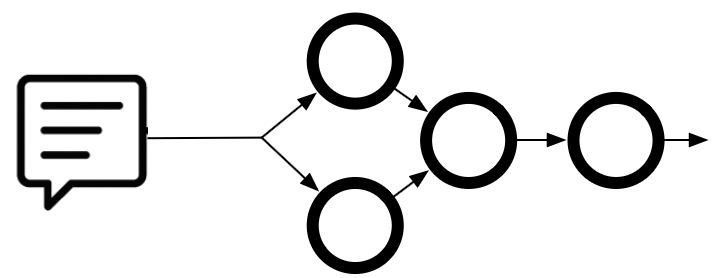

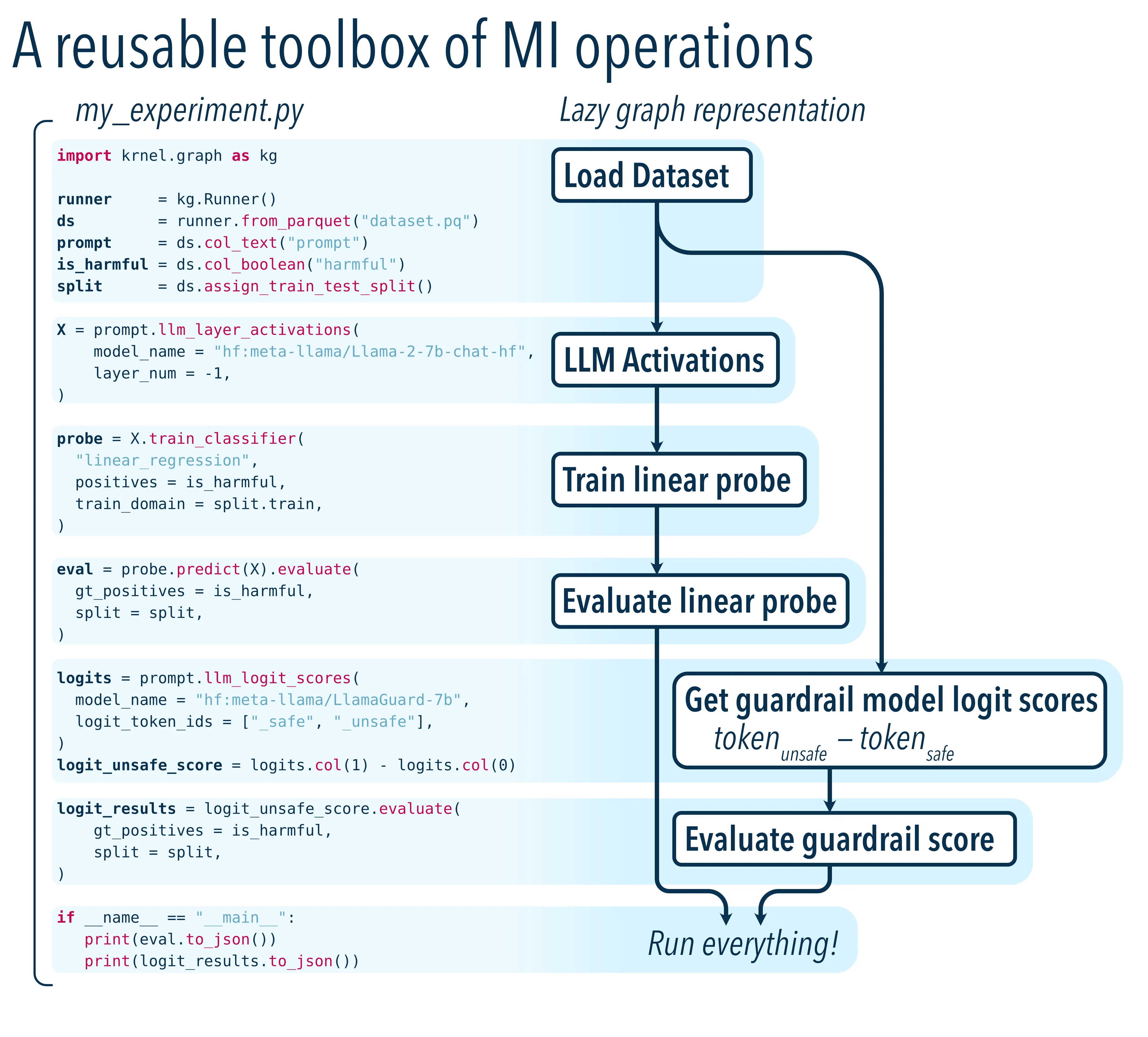

The scikit-learn of Representation Engineering

An open framework that brings the representation engineering tools we use internally to your agent workflows, so you can build in reliability and controls. Build and train probes that consume agent internals to detect your domain-specific policies with simple operations. The probes are super fast, accurate, interpretable, and specific to your use cases.
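To give a feel for what a probe is, the sketch below trains a tiny logistic-regression classifier on activation vectors using only the Python standard library. It is not the Krnel framework's API: the synthetic activations, the labels (1 meaning "violates a policy"), and the function names are hypothetical. In practice a library classifier such as scikit-learn's `LogisticRegression` could fill the same role.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_probe(activations, labels, lr=0.5, epochs=200):
    """Fit a linear probe with batch gradient descent on the logistic loss."""
    dim = len(activations[0])
    n = len(activations)
    weights, bias = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(activations, labels):
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for i in range(dim):
                grad_w[i] += err * x[i]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

def probe_score(probe, activation):
    """Probability that the activation belongs to the flagged class."""
    weights, bias = probe
    return sigmoid(sum(w * xi for w, xi in zip(weights, activation)) + bias)

# Synthetic stand-ins for real activations: two noisy clusters in 4 dimensions.
rng = random.Random(0)

def noisy(center):
    return [c + rng.gauss(0.0, 0.3) for c in center]

violating = [noisy([1.0, 1.0, 0.0, 0.0]) for _ in range(20)]
compliant = [noisy([-1.0, -1.0, 0.0, 0.0]) for _ in range(20)]

probe = train_probe(violating + compliant, [1] * 20 + [0] * 20)

print(probe_score(probe, [1.0, 1.0, 0.0, 0.0]) > 0.5)    # flagged side
print(probe_score(probe, [-1.0, -1.0, 0.0, 0.0]) > 0.5)  # compliant side
```

Because the probe is a single linear layer, scoring an activation costs one dot product, and the learned weights show which directions in the representation drive the decision.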

Wrap webhooks for controls, or contact us to help you deploy agent-native controls without provisioning additional control infrastructure. Probes trained by Krnel-graph also integrate directly into next-generation lakehouse infrastructure to enable seamless SIEM/SOAR functionality.

Watch our deep dive into how Krnel actively monitors and controls AI agents' thinking to ensure your policies are followed.

Learn how policy neurons work at the model level to detect and enforce compliance in real time, without external oversight systems.

Be the first to know about our latest research findings and updates, or email us at info@krnel.ai.